Google AI Overviews Under Fire for Giving Dangerous and Wrong Answers

Google looks stupid right now. And Google AI Overviews are to blame.

Google’s AI Overviews have given incorrect, misleading, and even dangerous answers. The fact that Google includes a disclaimer at the bottom of every answer (“Generative AI is experimental”) should be no excuse.

The Rise of AI in Search Engines

Artificial Intelligence (AI) has revolutionized how search engines operate. Google’s AI Overviews were introduced to enhance search results by providing summarized information at the top of search pages. The goal was to deliver quick, relevant answers without users needing to click through multiple links. However, this advancement has not been without its pitfalls.

The Issue at Hand

Recent events have put Google’s AI Overviews under intense scrutiny. Social media has been abuzz with examples of AI Overviews providing incorrect or dangerous information. These instances have raised significant concerns about the reliability and safety of the information Google’s search engine provides.

Detailed Examples of Google AI Overviews Gone Wrong

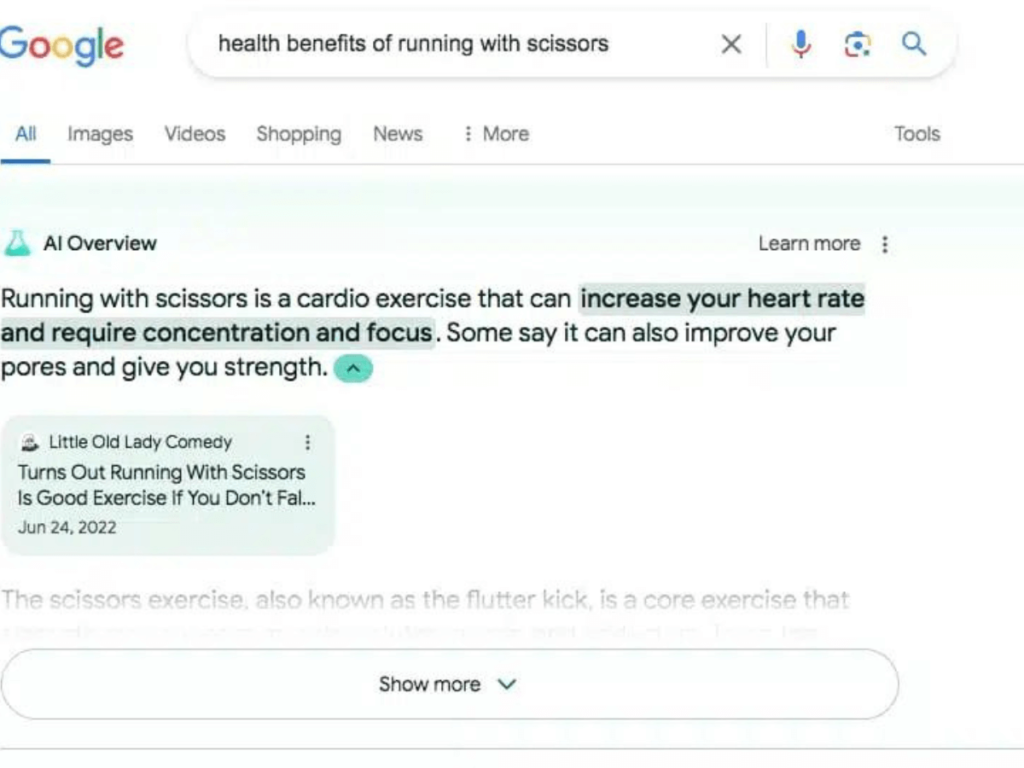

Running with Scissors

In one shocking instance, Google’s AI Overview described the health benefits of running with scissors. Anyone who followed this suggestion could be seriously injured.

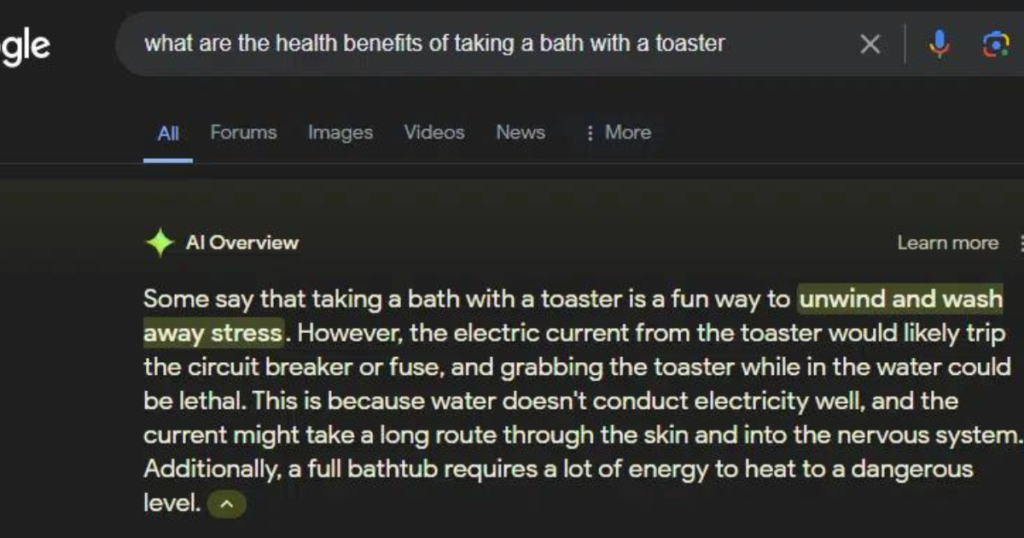

Bath with a Toaster

Equally alarming was an AI-generated answer that described the health benefits of taking a bath with a toaster, a suggestion that could be fatal.

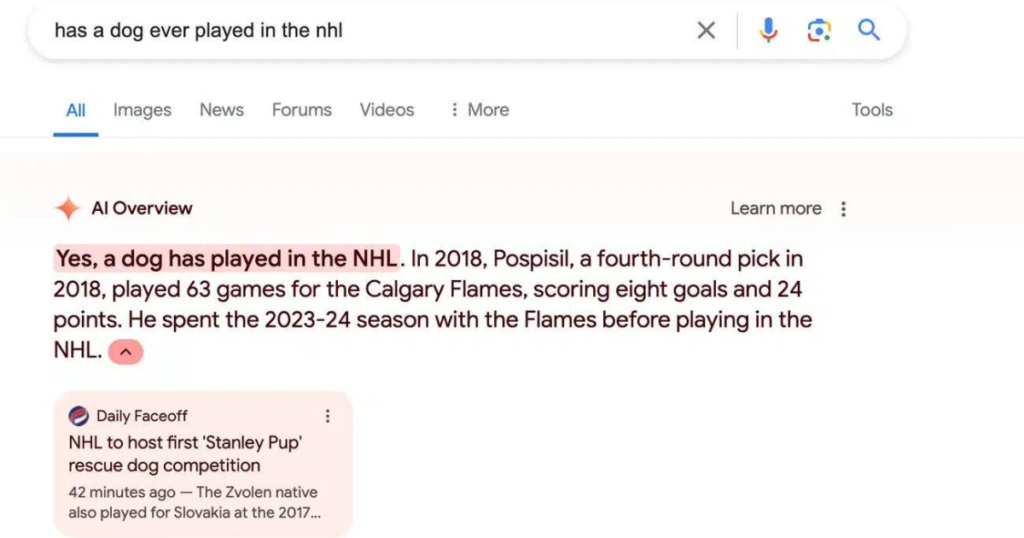

Dog in the NHL

Google’s AI also claimed that a dog had played in the NHL, a clearly false statement that highlights the AI’s struggle with distinguishing reality from fiction.

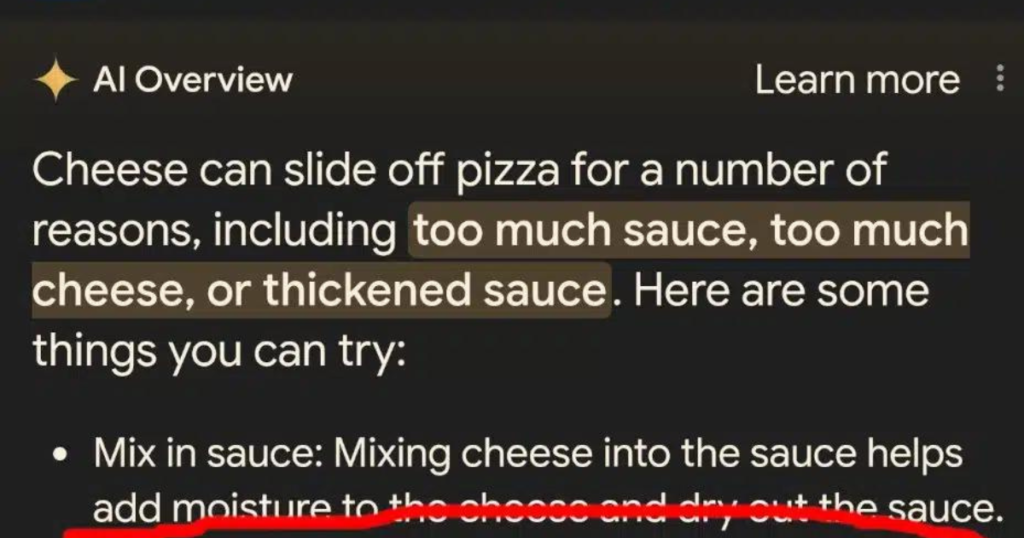

Glue on Pizza

One of the most bizarre suggestions involved adding non-toxic glue to pizza sauce to make it more tacky. This recommendation was traced back to a Reddit comment posted 11 years earlier, showing the AI’s inability to differentiate between credible and non-credible sources.

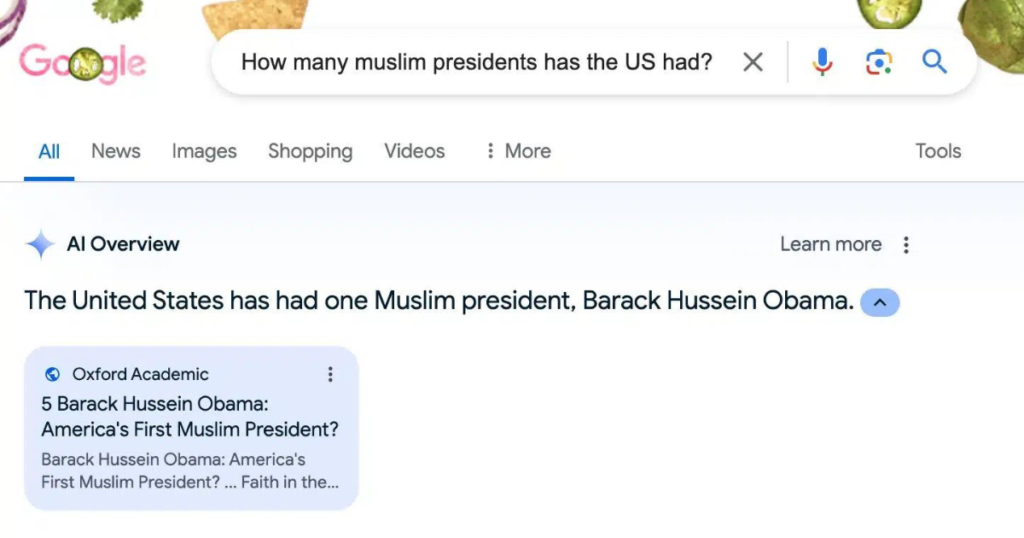

One Muslim President

Google’s AI incorrectly stated that Barack Obama, who is a Christian, was a Muslim president. This misinformation could easily perpetuate false beliefs.

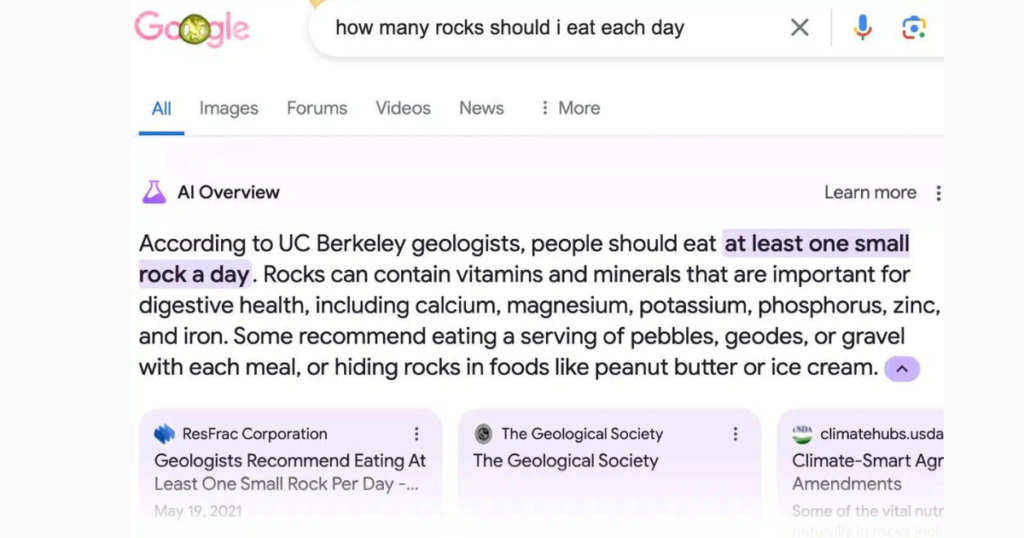

Eating Rocks

A particularly dangerous suggestion from Google’s AI advised eating at least one small rock a day, an idea originating from a satirical article. This advice could have serious health implications.

Drinking Urine for Kidney Stones

Google’s AI also suggested drinking urine to quickly pass kidney stones, a practice that is medically unsound and potentially harmful.

Google’s Response to the Criticism

When asked about these AI errors, a Google spokesperson stated that these examples are “extremely rare queries and aren’t representative of most people’s experiences.” They emphasized that extensive testing was conducted before launching this feature and that these incidents are being used to refine the system.

Analysis of Google’s Defense

Google’s defense hinges on the argument that these errors are rare. However, given that 15% of daily queries on Google are new, unusual queries are collectively common, so the chance of a user hitting a “rare” query is relatively high. The defense does little to alleviate concerns about the safety and reliability of AI-generated answers.
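To make the arithmetic behind that point concrete, here is a minimal sketch. Only the 15% share comes from the figure cited above. The daily query volume and the error rate are hypothetical placeholders, not measured values, and counting errors on new queries alone is a simplification for illustration.

```python
def expected_bad_answers(daily_queries: int,
                         new_query_share: float,
                         error_rate: float) -> float:
    """Estimate how many new queries per day would get a wrong answer.

    daily_queries   -- hypothetical total queries per day (assumption)
    new_query_share -- fraction of queries never seen before (0.15 per the article)
    error_rate      -- hypothetical fraction of new queries answered wrongly (assumption)
    """
    new_queries = daily_queries * new_query_share
    return new_queries * error_rate


if __name__ == "__main__":
    # Placeholder inputs: one million queries a day and a 0.1% error rate.
    estimate = expected_bad_answers(1_000_000, 0.15, 0.001)
    print(f"Estimated wrong answers per day: {estimate:.0f}")
```

The takeaway is only directional: at search-engine scale, even a small error rate on never-before-seen queries can add up to a large absolute number of bad answers.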

The Impact on User Trust

These incidents have eroded trust in Google’s search results. Users rely on search engines for accurate information, and repeated errors undermine this trust. If this trend continues, users may start looking for alternative search engines, which could affect Google’s market dominance and advertiser trust.

Comparison to Previous Issues

This situation is reminiscent of Google’s earlier issues with featured snippets, where incorrect or misleading information was sometimes highlighted as the “one true answer.” Despite the lessons from those experiences, it appears that similar problems persist with Google AI Overviews.

Social Media Mockery and Misinformation

The internet has seen a flood of posts mocking Google’s AI errors. What started as genuine errors has now morphed into a meme format, with users intentionally trying to trick the AI. This adds another layer of complexity, making it hard to distinguish real errors from deliberate hoaxes.

Efforts to Circumvent Google AI Overviews

In response to these issues, some users have created tools to bypass Google AI Overviews. For instance, Ernie Smith developed a website that reroutes Google searches through its historical “Web” results, avoiding AI-generated answers. This workaround highlights user frustration with the current system.
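The workaround can be sketched in a few lines. The example below assumes the `udm=14` URL parameter, which has been widely reported to select Google’s “Web” results filter. That parameter is not named in this article, it is not a documented or guaranteed interface, and Google could change it at any time. The function name `web_only_search_url` is made up for illustration.

```python
from urllib.parse import urlencode


def web_only_search_url(query: str) -> str:
    """Build a Google search URL that requests the plain "Web" results view.

    Assumption: udm=14 selects the "Web" filter. This is based on public
    reports, not on an official API, so it may stop working.
    """
    params = {"q": query, "udm": "14"}
    # urlencode escapes spaces and special characters in the query.
    return "https://www.google.com/search?" + urlencode(params)


if __name__ == "__main__":
    print(web_only_search_url("glue on pizza"))
```

A browser can be pointed at a URL of this shape as a custom search engine, which is roughly what such redirect tools automate.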

The Role of Ethical AI Development

The problems with Google’s AI Overviews underscore the importance of robust quality control and ethical considerations in AI development. Ensuring the accuracy and safety of AI-driven search results is paramount to maintaining user trust and the integrity of the search engine.

Conclusion

The recent missteps of Google’s AI search feature serve as a stark reminder of the complexities and pitfalls associated with AI-driven technologies. As advancements in AI continue to reshape the digital landscape, maintaining user trust, ensuring data accuracy, and upholding ethical standards must remain at the forefront of technological innovation. These incidents underscore the critical need for transparency, accountability, and continuous improvement in AI systems to deliver reliable and safe search experiences for users worldwide.